This offers IT a highly relevant metaphor.

Many of the problems that undermine IT effectiveness are self-inflicted. Just as lifestyle decisions have a tremendous impact on quality of life, how we work has a tremendous impact on the results we achieve. If we work in a high-risk manner, we have a greater probability of our projects having problems and thus requiring greater maintenance and repair. Increased maintenance and repair will draw down returns. The best people in an IT organisation will be assigned to remediating technical brownfields instead of creating an IT organisation that drives alpha returns. That assumes, of course, that an IT organisation with excessive brownfields can remain a destination employer for top IT talent.

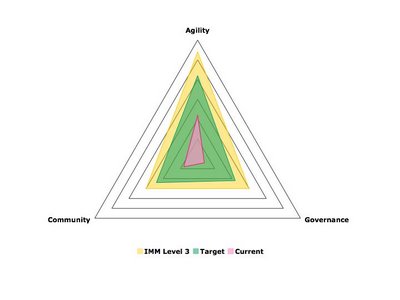

This suggests strongly that “how work is done” is an essential IT governance question. That is, IT governance must not be concerned only with measuring results, but also with knowing that the way in which those results are achieved complies with practices that minimise the probability of failure.

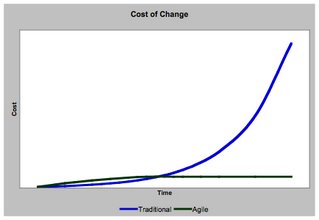

This wording is intentional: how work is performed reduces the probability of failure. If, in fact, lifestyle decisions can remove 70% of the probability that a person suffers any of 7 chronic conditions, so, too, can work practices reduce the probability that a project will fail. Let’s be clear: reducing the probability of failure is not the same as increasing the probability of success. That is, a team can work in such a way that it is less likely to cause problems for itself, for example by writing unit tests, having continuous integration, developing to finely grained statements of business functionality, embedding QA in the development team, and so forth. Doing these things isn’t the same as increasing the probability of success. Reducing the probability of failure is the reduction of unforced errors. In lifestyle terms, I may avoid certain actions that may cause cancer, but if cancer is written into my genetic code, the deck is stacked against me. So it is with IT projects: an extremely efficient IT project will still fail if it is blindsided because a market doesn’t materialise for the solution being developed. From a solution perspective, we can do things to control the risk of an unforced error. This is controllable risk, but it is only the risk internal to my project.

This latter point merits particular emphasis. If we do things that minimise the risk of an unforced error – if we automate a full suite of unit tests, if we demand zero tolerance for code quality violations, if we incrementally develop complete slices of functionality – we intrinsically increase our tolerance for external (and thus unpredictable) risk. We are more tolerant of external risk factors because we don’t accumulate process debt or technical debt that makes it difficult for us to absorb risk. Indeed, we can work each day to maintain an unleveraged state of solution completeness: we don’t accumulate “debt,” mortgaging our future with the downstream effort (such as “integration” and “testing”) needed to finish a partial solution which is alleged to be complete. Instead, we pull downstream tasks forward to happen with each and every code commit, thus maintaining solution completeness with every action we take.

One of our governance objectives must be that we are cognisant of how solutions are being delivered everywhere in the enterprise, because this is an indicator of their completeness. We must know that solutions satisfy a full set of business and technical expectations, not just that solutions are “code complete” awaiting an unmeasurable (and therefore opaque) process that makes code truly “complete.” These unmeasurable processes take time, and therefore cost; they are consequently a black box: we can time-box them, but we don’t really know the effort that will be required to pay down any accumulated debt. This opacity of IT is no different from opacity in an asset market: it makes the costs, and therefore the returns, of an IT asset much harder to quantify. The inability to demonstrate the functional completeness of a solution (e.g., because it is not developed end-to-end) as well as the technical quality of a solution (through continuous quality monitoring) creates uncertainty about whether the asset is going to provide a high business return. This uncertainty drives down the value of the assets that IT produces. The net effect is that it drives down the value of IT, just as the same uncertainty drives down the value of a security.

If the governance imperative is to understand that results are being achieved in addition to knowing how they are being achieved, we must consider another key point: what must we do to know with certainty how work is being performed? Consider three recent news headlines:

- Restaurant reviews lack transparency: restaurateurs encourage employees to submit reviews to surveys such as Zagat, and award free meals to restaurant bloggers who often fail to report their free dining when writing their reviews.2

- Some watchmakers have created a faux premium cachet: top watchmakers have been collaborating with specialist auction houses to drive up the prices by being the lead bidders on their own wares, and doing so anonymously. The notion that a Brand X watch recently sold for tens of thousands of dollars at auction increases the brand’s retail marketability by suggesting it has investment-grade or heirloom properties. That the buyer in the auction might have been the firm itself would obviously destroy that perception, but it is obfuscated from the retail consumer.3

- The credit rating of mortgage-backed securities created significant misinformation about risk exposure. Clearly, a AAA-rated CDO heavily laden with securitised sub-prime mortgages was never worthy of the same investment grade as, say, GE corporate bonds. The notion that what amounted to high-risk paper could be given a triple-A rating implied characteristics of the security that weren’t entirely true.

Thus, we must be very certain that we fully understand our facts about how work is being done. Do you have a complete set of process metrics established with your suppliers? To what degree of certainty do you trust the data you receive for those metrics? How would you know if they’re gaming the criteria that you set down (e.g., meaningless tests are being written to artificially inflate the degree of test coverage)? We must also not allow for surrogates: we cannot govern effectively by measuring documentation. We must focus on deliverables, and the artifacts of those deliverables, for indicators of how work is performed. A recently cited quote dating to the early years of CBS News is still relevant today: “everybody is entitled to their own opinion, but not their own facts.”4 Thus, IT governance must not only pay attention to how work is being done, it must also take great pains to ensure that the sources of data that tell us how that work is being done have a high degree of integrity. People may assert that they work in a low-risk manner, but that opinion may not withstand the scrutiny of fact-based management. As with any governance function, the order of the day is no different from that in the administration of nuclear proliferation treaties: “trust, but verify.”

This entire notion is a significant departure from traditional IT management. As Anatole France said of the Third Republic: “And while this enfeebles the state it lightens the burden on the people. . . . And because it governs little, I pardon it for governing badly.”5 On the whole, IT professionals will feel much the same about their host IT organisations. Why bother with all this effort to analyse process? All anybody cares about is that we produce “results” – for us, this means getting software into production no matter what. This process stuff looks pretty academic, a lot of colour-coded graphs in spreadsheets. It interferes with our focus on results.

Lackadaisical governance is potentially disastrous because governance does matter. There is significant data to suggest that competent governance yields higher returns, and similarly that incompetent governance yields lower returns. According to a 2003 study published by Paul Gompers, buying companies with good governance and selling those with poor governance from a population of 1,500 firms in the 1990s would have produced returns that beat the market by 8.5% per year.6 This suggests that there is a strong correlation between capable governance and high returns. Conversely, according to this report, there were strong indicators in 2001 that firms such as Adelphia and Global Crossing had significant deficiencies in their corporate governance, and that these firms represented significant investment risk.

As Gavin Anderson, chairman and co-founder of GovernanceMetrics International, recently said, “Well governed companies face the same kind of market and competitor risks as everybody else, but the chance of an implosion caused by an ineffective board or management is way less.”7 The same applies to IT. Ignoring IT practices reduces the transparency of IT operations, which in turn reduces IT returns. Governing IT so that it minimises self-inflicted wounds, specifically through awareness of “lifestyle” decisions, creates an IT capability that can drive alpha returns for the business.

1DeVol, Ross and Bedroussian, Armen with Anita Charuworn, Anusuya Chatterjee, In Kyu Kim, Soojung Kim and Kevin Klowden. An Unhealthy America: The Economic Burden of Chronic Disease -- Charting a New Course to Save Lives and Increase Productivity and Economic Growth. October 2007.

2McLaughlin, Katy. The Price of a Four Star Rating. The Wall Street Journal, 6-7 October 2007.

3Meichtry, Stacy. How Top Watchmakers Intervene in Auctions. The Wall Street Journal, 8 October 2007.

4Noonan, Peggy. Apocalypse No. The Wall Street Journal, 27-28 October 2007.

5Shirer, William L. The Collapse of the Third Republic. Simon and Schuster, 1969. Shirer attributes this quote to Anatole France, citing as his source Histoire des littératures, Vol. III, Encyclopédie de la Pléiade.

6Greenberg, Herb. Making Sense of the Risks Posed by Governance Issues. The Wall Street Journal, 26-27 May 2007.

7Ibid.